I teach a course where I allow the use of generative AI. My university's rules permit this, and students are instructed that they must cite any use of generative AI.

I have set the same laboratory report coursework for the last two years. Students submit their work through TurnItIn, so I can see what the TurnItIn AI checker is reporting (not that it is necessarily correct).

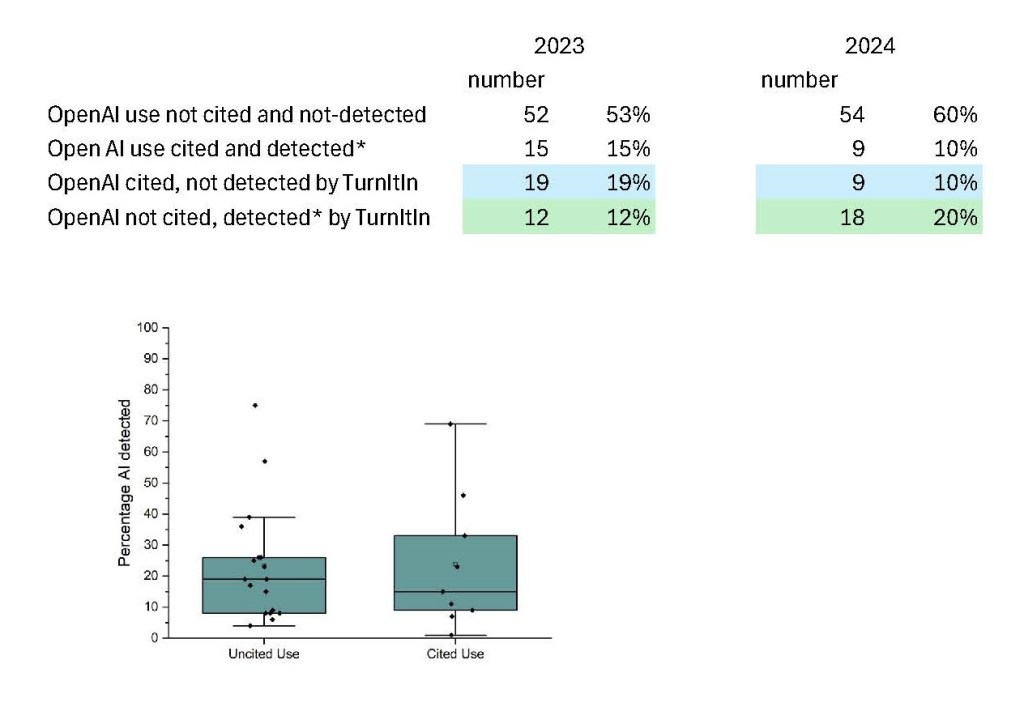

Anyway, for anyone interested, this is the data for 2023 and 2024.

Okay, the numbers are fewer than 100 in total, so everything has to be interpreted cautiously, and it is only two years of data.

It looks like about half the class did not use genAI in this coursework, and that hasn't changed over the two years. This is interesting, because a questionnaire of the class showed that they are using genAI more in their day-to-day lives.

There is a slight decline in the number of students who cited its use and were also flagged by TurnItIn. I am not sure that decline is significant.

There is a larger decrease in the cases where AI use is cited but not detected. However, I am not sure this means TurnItIn is getting better. Last year, more students used genAI in their analytical workflow rather than in their writing, and that might be reflected here.

The standout for me is that 20% of the class used genAI according to TurnItIn but did not cite its use. Is TurnItIn detecting correctly? I think it is for the majority of the detected cases. If so, is this the start of a trend?
